Sensitivity vs. Specificity: The Golden Keys to Accurate Veterinary Diagnostics

In the field of veterinary diagnostic reagents, sensitivity and specificity are often called the "golden parameters" of detection accuracy. They directly affect how efficiently animal diseases can be prevented and controlled, and with it the profitability of breeding operations. Today, let's take an in-depth look at these two core indicators.

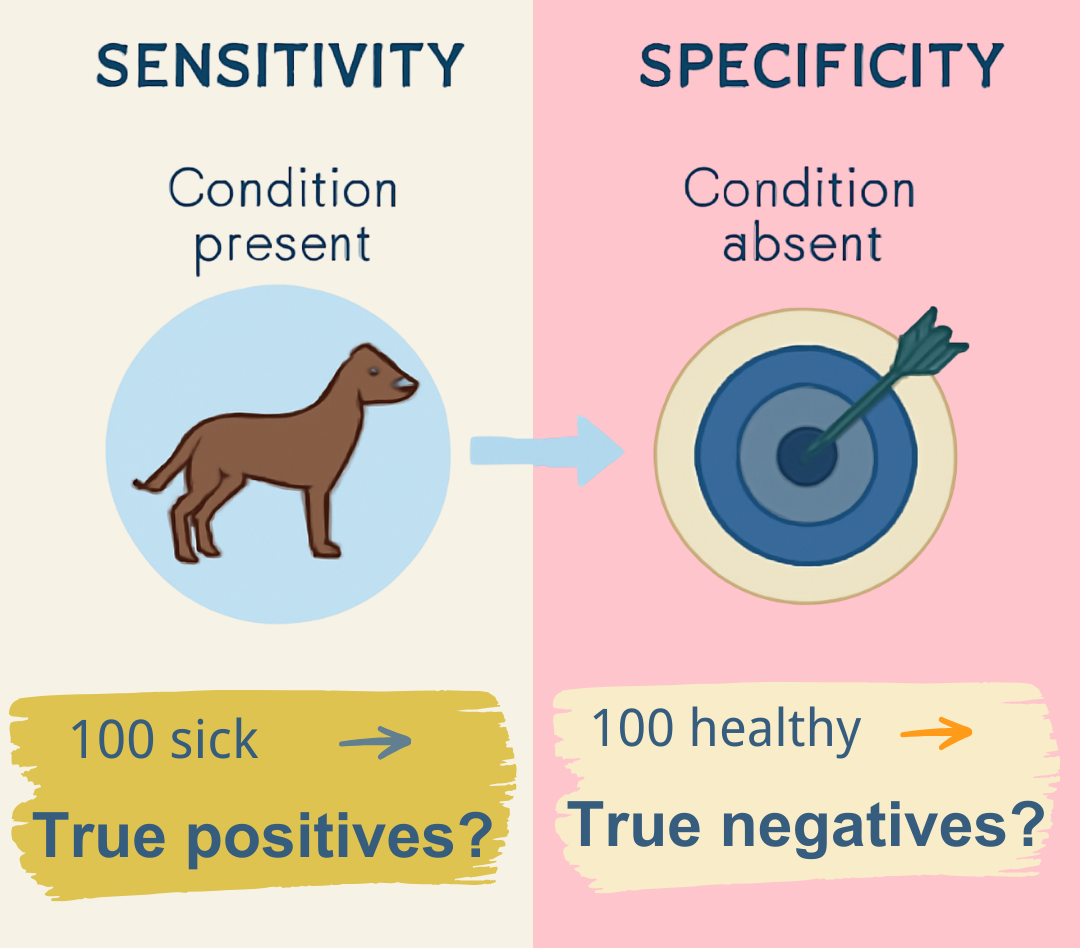

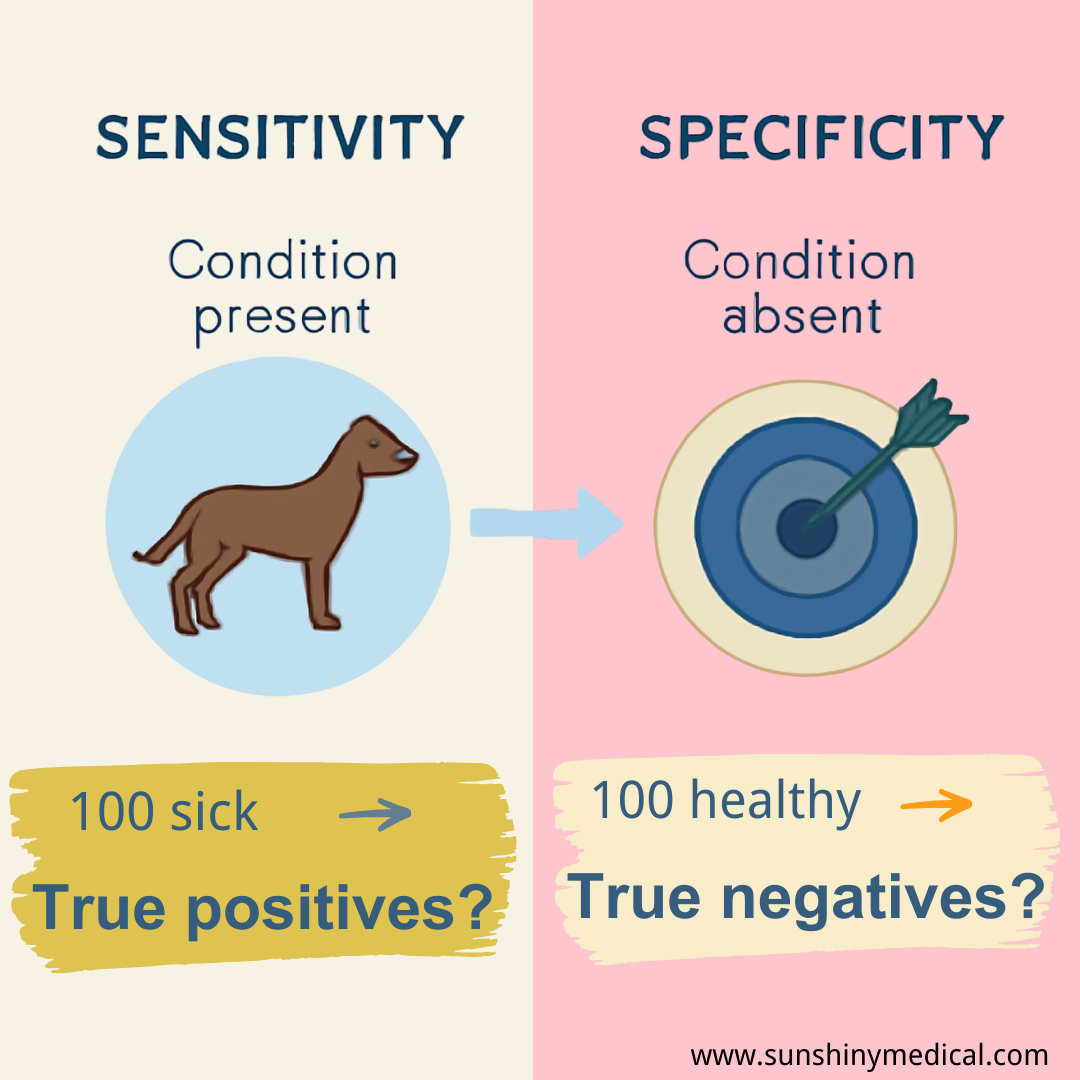

Sensitivity: Never Miss a "True Positive"

Sensitivity measures a reagent's ability to identify diseased animals and is also known as the "true positive rate." For instance, a sensitivity of 95% means that out of 100 infected animals the reagent detects 95 and misses 5, a 5% false negative rate. Highly sensitive reagents are crucial for early disease screening, especially for severe, rapidly spreading infectious diseases such as African swine fever and avian influenza, where early detection is key to quickly breaking the transmission chain.
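The arithmetic behind the 95% figure can be written out as a minimal Python sketch. The function and parameter names are illustrative, not taken from any particular library, and the counts are simply the ones used in the example above.

```python
def sensitivity(true_positives: int, false_negatives: int) -> float:
    """Fraction of truly infected animals that the test flags as positive."""
    infected = true_positives + false_negatives
    if infected == 0:
        raise ValueError("need at least one infected animal")
    return true_positives / infected


# The example from the text: 100 infected animals, 95 detected, 5 missed.
print(sensitivity(true_positives=95, false_negatives=5))  # 0.95
```

Note that only infected animals enter the calculation; results from healthy animals have no influence on sensitivity.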

Specificity: Don't Misdiagnose Any "Healthy" Animals

Specificity describes the reagent's "precision strike" capability: the probability that a healthy animal is correctly classified as negative rather than misclassified as positive, also known as the "true negative rate." For instance, a specificity of 98% means that for every 100 healthy animals tested, only 2 will be false positives. In high-stakes scenarios such as breeding livestock quarantine and export animal inspection, high specificity can significantly reduce the economic losses caused by misdiagnosis.
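The 98% figure follows the same pattern, this time counting only healthy animals. Again, this is a minimal Python sketch with illustrative names, using the counts from the example above.

```python
def specificity(true_negatives: int, false_positives: int) -> float:
    """Fraction of truly healthy animals that the test reports as negative."""
    healthy = true_negatives + false_positives
    if healthy == 0:
        raise ValueError("need at least one healthy animal")
    return true_negatives / healthy


# The example from the text: 100 healthy animals, 98 negative, 2 false positives.
print(specificity(true_negatives=98, false_positives=2))  # 0.98
```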

Ideally a reagent would offer both high sensitivity and high specificity, but in practice the two often have to be balanced. Just as COVID-19 testing prioritized sensitivity early on (to catch as many cases as possible) and specificity later (to reduce false positives), veterinary diagnostics tend to emphasize sensitivity during outbreaks and specificity in routine monitoring.
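One common way this balance shows up is through the positivity cutoff of a test that produces a continuous reading. The sketch below assumes such a test, which the article itself does not specify, and uses made-up readings purely for illustration; `rates_at_cutoff` is a hypothetical helper, not part of any library.

```python
def rates_at_cutoff(infected_scores, healthy_scores, cutoff):
    """Return (sensitivity, specificity) when a reading >= cutoff counts as positive."""
    true_positives = sum(score >= cutoff for score in infected_scores)
    true_negatives = sum(score < cutoff for score in healthy_scores)
    return (
        true_positives / len(infected_scores),
        true_negatives / len(healthy_scores),
    )


# Made-up readings for illustration only (not real assay data).
infected = [0.35, 0.50, 0.62, 0.70, 0.81, 0.90]
healthy = [0.10, 0.18, 0.25, 0.33, 0.41, 0.55]

for cutoff in (0.30, 0.45, 0.60):
    sens, spec = rates_at_cutoff(infected, healthy, cutoff)
    print(f"cutoff={cutoff:.2f}  sensitivity={sens:.2f}  specificity={spec:.2f}")
```

With these toy numbers, the lowest cutoff catches every infected animal but flags half of the healthy ones, while the highest cutoff clears every healthy animal but misses a third of the infected ones. A lower cutoff would therefore suit outbreak screening, and a higher one routine monitoring.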